Prompt Design at Character.AI

Author: James Groeneveld

Github: https://github.com/character-ai/prompt-poet

PyPi: https://pypi.org/project/prompt-poet/

At Character.AI, mastering the art and science of Prompt Engineering is crucial. Constructing prompts in production involves considering a wide array of data and factors: current conversation modalities, ongoing experiments, the Characters involved, chat types, various user attributes, pinned memories, user personas, the entire conversation history and more. Given the billions of prompts we construct per day, the need to maximize the use of expanding LLM context windows, and the diversity of our use cases, a robust and scalable approach to prompt design is essential. We advocate transitioning from traditional 'prompt engineering' to 'prompt design'—a shift that moves us away from tedious string manipulations towards designing precise, engaging prompts. This post introduces Prompt Poet, a solution we've developed to do just that.

Brief Overview

Python f-strings (and wrappers around them) are now the industry standard for Prompt Engineers. Using f-strings can be as simple as adding a user's query directly into a string. However, it can also become very complex, involving a lot of manual string manipulation to create the final prompt. It also makes prompt iteration less accessible to non-technical individuals, as it requires writing code.

We believe there's a better way. That's why we created Prompt Poet (Github / PyPi), a tool that allows both developers and non-technical users to efficiently design and manage their production prompts. It saves time on engineering string manipulations, enabling everyone to focus more on crafting the optimal prompts for their users.

Borrowing from the world of UI design, we consider a prompt P as a function of runtime state–including the prompt template, data, token limit and more.

Basic Usage

import os
import getpass

from prompt_poet import Prompt
from langchain_openai import ChatOpenAI

# Uncomment if you need to set OPENAI_API_KEY.

# os.environ["OPENAI_API_KEY"] = getpass.getpass()

raw_template = """
- name: system instructions
  role: system
  content: |
    Your name is {{ character_name }} and you are meant to be helpful and never harmful to humans.

- name: user query
  role: user
  content: |
    {{ username }}: {{ user_query }}

- name: response
  role: user
  content: |
    {{ character_name }}:
"""

prompt = Prompt(
    raw_template=raw_template,
    template_data={
        "character_name": "Character Assistant",
        "username": "Jeff",
        "user_query": "Can you help me with my homework?"
    }
)

prompt.messages

>>> [{'role': 'system', 'content': 'Your name is Character Assistant and you are meant to be helpful and never harmful to humans.'}, {'role': 'user', 'content': 'Jeff: Can you help me with my homework?'}, {'role': 'user', 'content': 'Character Assistant:'}]

model = ChatOpenAI(model="gpt-4o-mini")

response = model.invoke(prompt.messages)

response

>>> AIMessage(content='Of course, Jeff! I’d be happy to help you with your homework. What subject are you working on, and what do you need assistance with?', response_metadata={'token_usage': {'completion_tokens': 31, 'prompt_tokens': 47, 'total_tokens': 78}, 'model_name': 'gpt-4o-mini-2024-07-18', 'system_fingerprint': 'fp_0f03d4f0ee', 'finish_reason': 'stop', 'logprobs': None}, id='run-5fff6ab5-5dee-40d8-b11c-7a9637406c36-0', usage_metadata={'input_tokens': 47, 'output_tokens': 31, 'total_tokens': 78})

Prompt Templates

With Prompt Poet, the time you once spent on prompt engineering can now be dedicated to prompt design, allowing you to iterate on templates rather than code. These templates use a mix of YAML and Jinja2, making them both flexible and easy to combine. This approach empowers both developers and non-technical users to efficiently create and manage prompts. Template processing occurs in two primary stages:

- Rendering: Jinja2 first processes the input data. During this phase, control flow logic is executed, data is validated and bound to variables, and functions within the template are evaluated.

- Loading: Post-rendering, the output is a structured YAML file. This YAML structure consists of repeated blocks or parts, each encapsulated into a Python data structure. These parts are characterized by several attributes:

  - Name: A clear, human-readable identifier for the part.

  - Content: The actual string payload that forms part of the prompt.

  - Role (Optional): Specifies the role of the participant, aiding in distinguishing between different users or system components.

  - Truncation Priority (Optional): Determines the order of truncation when necessary, with parts having the same priority being truncated in the order in which they appear.
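The two stages can be sketched directly with the `jinja2` and `PyYAML` packages. This is a minimal illustration of render-then-load under our own assumptions, not Prompt Poet's internal implementation; the template, the `messages` variable and the part names are made up for the example.

```python
import jinja2
import yaml

# Hypothetical template: one static part plus one part per chat message.
raw_template = """
- name: system instructions
  role: system
  content: |
    Your name is {{ character_name }}.
{% for message in messages %}
- name: chat_message_{{ loop.index }}
  role: user
  truncation_priority: 1
  content: |
    {{ message }}
{% endfor %}
"""

# Stage 1 (rendering): Jinja2 runs the control flow and binds the data.
rendered = jinja2.Template(raw_template).render(
    character_name="Character Assistant",
    messages=["Jeff: Hi", "Jeff: Are you there?"],
)

# Stage 2 (loading): the rendered text is plain YAML, a list of parts.
parts = yaml.safe_load(rendered)

for part in parts:
    print(part["name"], "|", part["role"], "|", part["content"].strip())
```

Each loaded part is an ordinary dictionary carrying the attributes listed above, which is what makes per-part operations such as truncation possible.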

Example: Basic Q&A Bot

- name: system instructions
  role: system
  content: |
    Your name is {{ character_name }} and you are meant to be helpful and never harmful to humans.

- name: user query
  role: user
  content: |
    {{ username }}: {{ user_query }}

- name: response
  role: user
  content: |
    {{ character_name }}:

A basic example of a template for a Q&A bot.

Interpolating Lists

{% for message in current_chat_messages %}
- name: chat_message
  role: user
  content: |
    {{ message.author }}: {{ message.content }}
{% endfor %}

If you have elements (e.g. messages) in a list, you can parse them into your template like so.

Truncating Old Messages

{% for message in current_chat_messages %}
- name: chat_message
  role: user
  truncation_priority: 1
  content: |
    {{ message.author }}: {{ message.content }}
{% endfor %}

Context length is limited and can’t always fit the entire chat history, so we can set a truncation priority on the message parts and Prompt Poet will truncate these parts in the order in which they appear (oldest to newest).

Adapting to User Modality

{% if modality == "audio" %}
- name: special audio instruction
  role: system
  content: |
    {{ username }} is currently using audio. Keep your answers succinct.
{% endif %}

To tailor instructions based on the user's current modality (audio or text).

Targeting Specific Queries

{% if extract_user_query_topic(user_query) == "homework_help" %}
{% for homework_example in fetch_few_shot_homework_examples(username, character_name) %}
- name: homework_example_{{ loop.index }}
  role: user
  content: |
    {{ homework_example }}
{% endfor %}
{% endif %}

To include context-specific examples like homework help when needed.

Handling Whitespace

- name: system instructions
  role: system
  content: |
    Your name is {{ character_name }} and you are meant to be helpful and never harmful to humans.

- name: user query
  role: user
  content: |
    <|space|>{{ username }}: {{ user_query }}

Prompt Poet will strip whitespace by default to avoid unwanted newlines in your final prompt. If you want to include an explicit space use the special built-in space marker “<|space|>” to ensure proper formatting.

Putting It All Together

- name: system instructions
  role: system
  content: |
    Your name is {{ character_name }} and you are meant to be helpful and never harmful to humans.

{% if modality == "audio" %}
- name: special audio instruction
  role: system
  content: |
    {{ username }} is currently using audio modality. Keep your answers succinct and to the point.
{% endif %}

{% if extract_user_query_topic(user_query) == "homework_help" %}
{% for homework_example in fetch_few_shot_homework_examples(username, character_name) %}
- name: homework_example_{{ loop.index }}
  role: user
  content: |
    {{ homework_example }}
{% endfor %}
{% endif %}

{% for message in current_chat_messages %}
- name: chat_message
  role: user
  truncation_priority: 1
  content: |
    {{ message.author }}: {{ message.content }}
{% endfor %}

- name: user query
  role: user
  content: |
    {{ username }}: {{ user_query }}

- name: reply_prompt
  role: user
  content: |
    {{ character_name }}:

Compositionality is a core strength of Prompt Poet templates, enabling the creation of complex, dynamic prompts.

Decomposing Into Sections

{% include 'sections/system_instruction.yml.j2' %}

{% include 'sections/audio_instruction.yml.j2' %}

{% if extract_user_query_topic(user_query) == "homework_help" %}
{% include 'sections/homework_examples.yml.j2' %}
{% endif %}

{% include 'sections/chat_messages.yml.j2' %}

{% include 'sections/user_query.yml.j2' %}

{% include 'sections/reply_prompt.yml.j2' %}

To maintain DRY principles in your templates, break them down into reusable sections that can be applied across different templates, such as when A/B testing a new prompt.

This is just the beginning of what your Prompt Poet templates could do and we’re excited to see what you come up with!

Design Choices

Prompt Poet Library

The Prompt Poet Library provides various features and settings, including prompt properties. Key features like tokenization and truncation help with efficient caching and low latency responses, as explained in Optimizing Inference.

prompt.tokenize()

prompt.truncate(token_limit=TOKEN_LIMIT, truncation_step=TRUNCATION_STEP)

# Inspect prompt as a raw string.

prompt.string: str

>>> "..."

# Inspect the prompt as raw tokens.

prompt.tokens: list[int]

>>> [...]

# Inspect the prompt as LLM API message dicts.

prompt.messages: list[dict]

>>> [...]

# Inspect the prompt as first class parts.

prompt.parts: list[PromptPart]

>>> [...]

Templating Language

Jinja2 and YAML combine to offer an incredibly extensible and expressive templating language. Jinja2 facilitates direct data bindings, arbitrary function calls, and basic control flow within templates. YAML provides structure to our templates (with depth=1) allowing us to perform sophisticated truncation when the token limit is reached. This pairing of Jinja2 and YAML is not unique – most notably it is used by Ansible.

Prompt Portability

At Character.AI we are constantly improving our models to better align them with user preferences. To do this, we need to reconstruct our production prompts inside offline processes such as eval and post-training workloads. Templatizing our prompts in this way allows us to easily share template files among teams without having to stitch together disparate parts of our ever-evolving codebase.

Template-native Function Calling

One standout feature of Jinja2 is the ability to invoke arbitrary Python functions directly within templates at runtime. This feature is crucial for on-the-fly data retrieval, manipulation, and validation, streamlining how prompts are constructed. In the example below, `extract_user_query_topic` can perform arbitrary processing of the user's query, and its result drives the template's control flow, perhaps after a round-trip to a topic classifier.

{% if extract_user_query_topic(user_query) == "homework_help" %}
{% for homework_example in fetch_few_shot_homework_examples(username, character_name) %}
- name: homework_example_{{ loop.index }}
  role: user
  content: |
    {{ homework_example }}
{% endfor %}
{% endif %}

An example of template-native function calling with the use of extract_user_query_topic.
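To make the mechanism concrete, here is a small sketch that renders the template above with plain `jinja2`. It assumes the callables are handed to the renderer alongside the other template data; both function bodies are hypothetical placeholders standing in for a real classifier and a real example store.

```python
import jinja2


def extract_user_query_topic(user_query: str) -> str:
    # Hypothetical stand-in for a round-trip to a topic classifier.
    return "homework_help" if "homework" in user_query.lower() else "other"


def fetch_few_shot_homework_examples(username: str, character_name: str) -> list[str]:
    # Hypothetical stand-in for a lookup against an example store.
    return [f"{username}: What is 2 + 2?", f"{character_name}: 2 + 2 = 4."]


raw_template = """\
{% if extract_user_query_topic(user_query) == "homework_help" %}
{% for homework_example in fetch_few_shot_homework_examples(username, character_name) %}
- name: homework_example_{{ loop.index }}
  role: user
  content: |
    {{ homework_example }}
{% endfor %}
{% endif %}
"""

# Functions are passed in like any other value and called at render time.
template_data = {
    "extract_user_query_topic": extract_user_query_topic,
    "fetch_few_shot_homework_examples": fetch_few_shot_homework_examples,
    "username": "Jeff",
    "character_name": "Character Assistant",
    "user_query": "Can you help me with my homework?",
}

rendered = jinja2.Template(raw_template).render(**template_data)
print(rendered)
```

With a query that the placeholder classifier does not label as homework help, the same template renders no parts at all.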

Custom Encoding Function

By default Prompt Poet will use the TikToken “o200k_base” tokenizer although alternate encoding names may be provided in the top-level `tiktoken_encoding_name`. Alternatively, users can provide their own encode function with the top-level `encode_func: Callable[[str], list[int]]`.

from tiktoken import get_encoding

# Pass the tokenizer's encode method, which maps a string to a list of token ids.
encode_func = get_encoding("o200k_base").encode

prompt = Prompt(
    raw_template=raw_template,
    template_data=template_data,
    encode_func=encode_func
)

prompt.tokenize()
prompt.tokens
>>> [...]

Passing a custom encoding function to prompt construction.
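Any callable with the `Callable[[str], list[int]]` shape described above should fit. As an illustration only, here is a toy byte-level encoder; `byte_encode` is a hypothetical name and this is not a tokenizer you would use in production, since its counts will not match any real model's tokenizer.

```python
def byte_encode(text: str) -> list[int]:
    """Toy encoder: one token id per UTF-8 byte of the input."""
    return list(text.encode("utf-8"))


tokens = byte_encode("Jeff: Hi")
print(len(tokens), tokens[:4])
```

Under the signature stated above, such a function would be passed as `encode_func=byte_encode` in place of the tiktoken encoder.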

Truncation

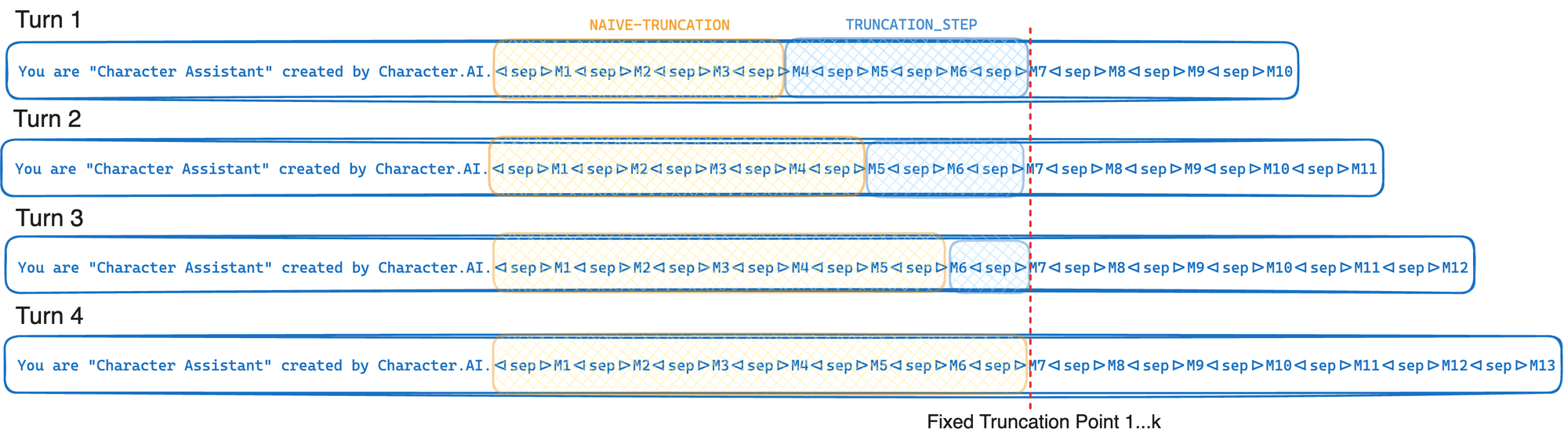

If your LLM provider supports GPU affinity and prefix cache, utilize Character.AI’s truncation algorithm to maximize the prefix-cache rate. The prefix cache rate is defined as the number of prompt tokens retrieved from cache over the total number of prompt tokens. Find the optimal values for truncation step and token limit for your use case. As the truncation step increases, the prefix cache rate also rises, but more tokens are truncated from the prompt.

TOKEN_LIMIT = 128000
TRUNCATION_STEP = 4000

# Tokenize and truncate the prompt.
prompt.tokenize()
prompt.truncate(token_limit=TOKEN_LIMIT, truncation_step=TRUNCATION_STEP)

response = model.invoke(prompt.messages)

An example of cache-aware prompt truncation with specified token limit and truncation step.
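The prefix cache rate defined above can be illustrated with a few lines of Python. This sketch makes a simplifying assumption that is ours, not the source's: the cache holds exactly the previous turn's prompt, and only the longest shared token prefix counts as retrieved from cache. The function name and token values are hypothetical.

```python
def prefix_cache_rate(cached_tokens: list[int], prompt_tokens: list[int]) -> float:
    """Fraction of prompt tokens covered by the longest shared prefix."""
    if not prompt_tokens:
        return 0.0
    shared = 0
    for cached, current in zip(cached_tokens, prompt_tokens):
        if cached != current:
            break
        shared += 1
    return shared / len(prompt_tokens)


previous_turn = [1, 2, 3, 4, 5, 6, 7, 8]

# Same starting point, two new tokens appended: most of the prompt is reusable.
same_start = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

# Starting point shifted by one token: the shared prefix is empty.
shifted_start = [2, 3, 4, 5, 6, 7, 8, 9, 10]

print(prefix_cache_rate(previous_turn, same_start))     # 0.8
print(prefix_cache_rate(previous_turn, shifted_start))  # 0.0
```

The second case shows why the position of the truncation point matters: removing even one token from the front of the prompt invalidates the whole cached prefix.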

Cache Aware Truncation Explained

Our truncation strategy plays a significant role in allowing us to achieve an impressive 95% cache rate by optimizing the way messages are truncated from the prompt. In short, every time we truncate, we do so up to a fixed truncation point, moving this truncation point only every k turns on average. This allows us to maximally exploit the GPU prefix cache described in Optimizing Inference. If instead we simply truncated until reaching the token limit (L), this truncation point would move every turn. The tradeoff in this approach is that we often truncate more than we strictly need to.

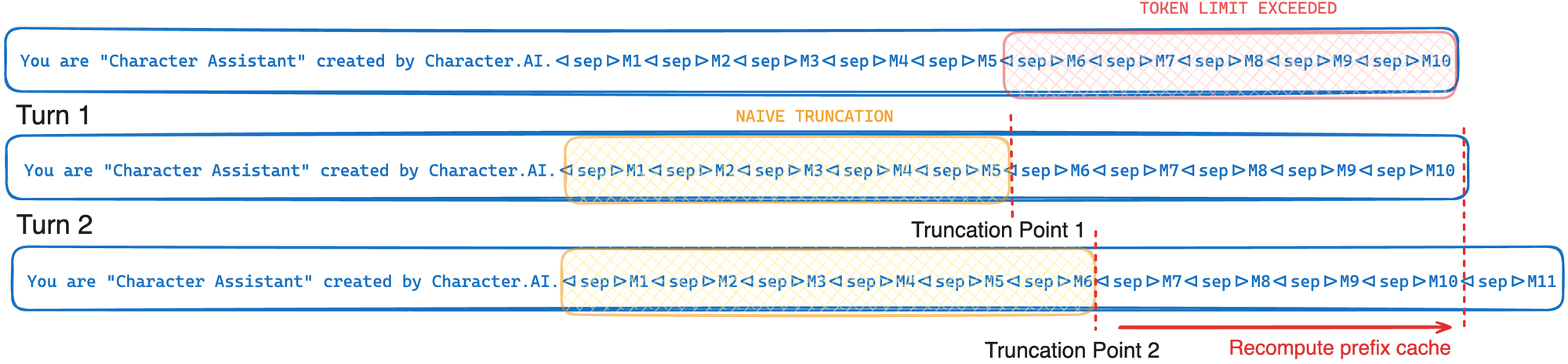

Naive Truncation

Consider the following inter-turn example where M1…M10 are current messages in a given chat. If we naively truncate to just below the token limit our truncation point shifts every turn, leaving only a small portion of the prefix to be retrieved from the cache, resulting in significant recomputation costs.

Cache-aware Truncation

Character.AI's cache-aware truncation algorithm truncates up to the same fixed truncation point for every k turns. This means that the sequence of tokens remains unbroken up until the most recent message allowing us to reuse computations stored in GPU prefix cache from the previous turn. Note that k is not directly controllable but is a function of the truncation step and the average number of tokens per message being truncated.
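The difference between the two strategies can be sketched as follows. This is a simplified illustration and not the library's implementation: it assumes truncation happens at whole-message granularity, and it obtains a fixed truncation point by rounding the overflow up to a multiple of the truncation step, which is one simple way to get the behavior described. The function names and numbers are hypothetical.

```python
import math


def naive_drop_count(message_tokens: list[int], token_limit: int) -> int:
    """Drop the oldest messages only until the prompt fits the limit."""
    total = sum(message_tokens)
    count = 0
    for n in message_tokens:
        if total <= token_limit:
            break
        total -= n
        count += 1
    return count


def cache_aware_drop_count(
    message_tokens: list[int], token_limit: int, truncation_step: int
) -> int:
    """Drop the oldest messages in whole multiples of the truncation step."""
    total = sum(message_tokens)
    if total <= token_limit:
        return 0
    overflow = total - token_limit
    # Round the overflow up to the next multiple of the step (assumption).
    target = math.ceil(overflow / truncation_step) * truncation_step
    dropped = 0
    count = 0
    for n in message_tokens:
        if dropped >= target:
            break
        dropped += n
        count += 1
    return count


# Ten-token messages, a 100-token limit and a 40-token step, over six turns.
turns = [[10] * n for n in range(10, 16)]
naive = [naive_drop_count(t, 100) for t in turns]
aware = [cache_aware_drop_count(t, 100, 40) for t in turns]
print(naive)  # [0, 1, 2, 3, 4, 5]
print(aware)  # [0, 4, 4, 4, 4, 8]
```

In this toy run the naive truncation point moves on every turn, while the cache-aware one stays put for four turns before jumping, at the cost of dropping more messages than strictly necessary in between.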

Conclusion

Prompt Poet represents a significant leap forward in the field of prompt engineering, shifting the focus from cumbersome manual string manipulations to a more streamlined and intuitive design-focused approach. By leveraging principles of UI design and applying them to prompt construction, this tool simplifies the creation of complex and personalized prompts, enhancing the quality of interactions between users and AI models.

With Prompt Poet, both developers and non-technical users are empowered to focus more on prompt design and less on prompt engineering. This shift towards design over engineering holds the potential to reshape how we interact with AI, making these interactions more efficient, intuitive, and aligned with user needs. As we continue to explore the capabilities of large language models and expand their applications, tools like Prompt Poet will play a crucial role in harnessing their full potential in user-centric ways.

Related Work

- Priompt: Priompt (priority + prompt) is a JSX-based prompting library. It uses priorities to decide what to include in the context window. This project achieves a similar goal by separating a templating layer from a logical construction layer, and it is written in and intended for TypeScript.

- dspy: Provides a great way of automagically optimizing prompts for different models though lacks deterministic control of the prompt important for things like caching and high-throughput, low latency production systems.

- Prompt Engine: Born from the common problem that production prompt engineering requires substantial code to manipulate and update strings, this TypeScript package similarly adds structure to the prompt templating process, though it comes across as somewhat opinionated, making assumptions based on its use cases. With the last commits being from 2 years ago, it does not seem as though this package is in active development.

- llm: Allows basic prompts to be defined in YAML with the Jinja2 enabled features like dynamic control flow, function calling and data bindings.

- Raw Python f-strings: There are several projects that take slightly different approaches to prompt templating by wrapping f-strings:

  - LangChain: LangChain has a much larger scope than prompt templates though it does provide some basic templating abstractions. Good for simple templating use cases then starts to get unwieldy as prompts increase in complexity.

  - LlamaIndex: Like LangChain, LlamaIndex has a much larger scope than prompt templates though it also provides some basic templating abstractions.

  - Mirascope: Implements a novel approach to prompt templating by encapsulating everything in a single python class and using the class’s docstring as the f-string into which to bind data.